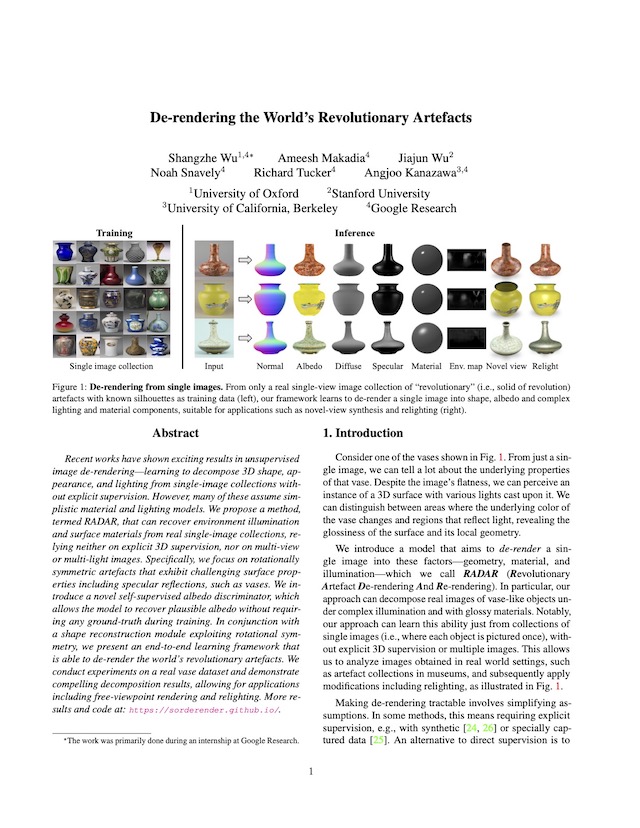

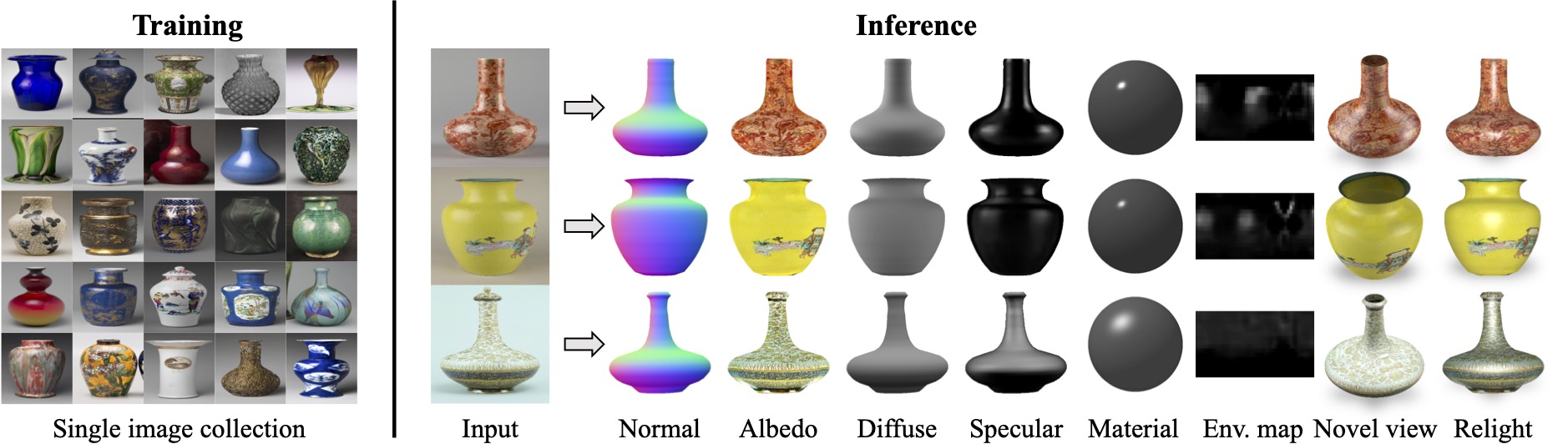

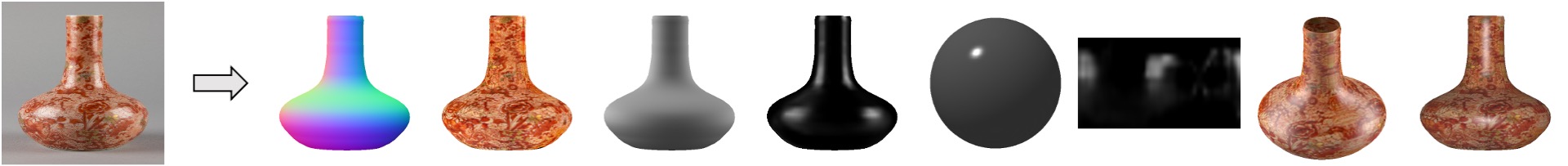

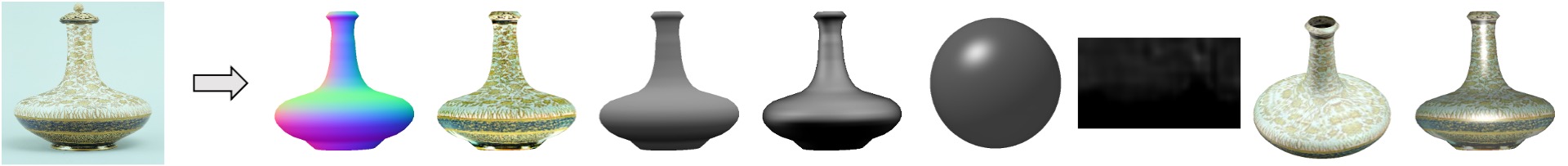

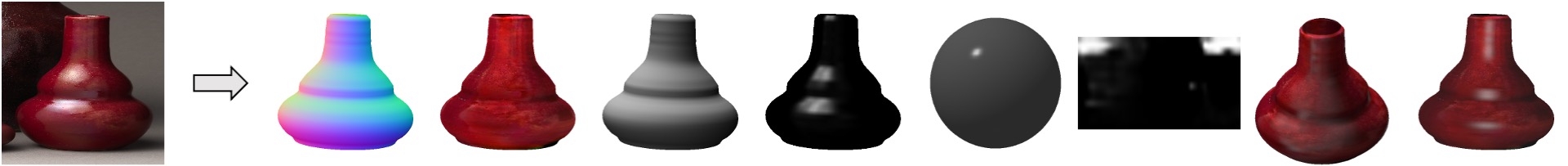

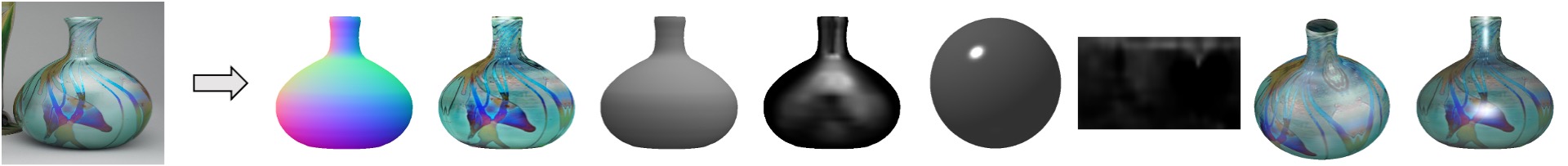

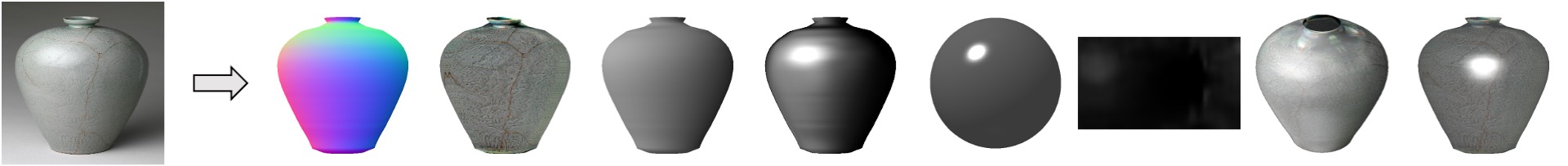

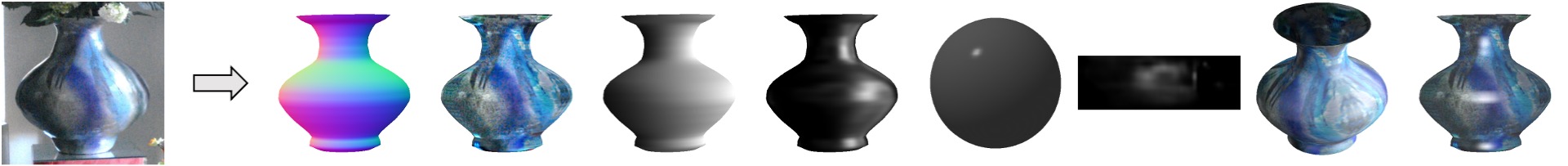

Given only a collection of real single-view images of "revolutionary" (i.e., solid-of-revolution) artefacts with known silhouettes as training data (left), our framework learns to de-render a single image into shape, albedo, and complex lighting and material components, enabling novel-view synthesis and relighting (right).

* This work was primarily done while Shangzhe Wu was interning at Google Research.

Abstract

Recent works have shown exciting results in unsupervised image de-rendering: learning to decompose 3D shape, appearance, and lighting from single-image collections without explicit supervision. However, many of them assume simplistic material and lighting models. We propose a method, termed RADAR† (Revolutionary Artefact De-rendering And Re-rendering), that can recover environment illumination and surface materials from real single-image collections, relying neither on explicit 3D supervision nor on multi-view or multi-light images. Specifically, we focus on rotationally symmetric artefacts, such as vases, that exhibit challenging surface properties including specular reflections. We introduce a novel self-supervised albedo discriminator, which allows the model to recover plausible albedo without requiring any ground truth during training. In conjunction with a shape reconstruction module exploiting rotational symmetry, we present an end-to-end learning framework that is able to de-render the world's revolutionary artefacts. We conduct experiments on a real vase dataset and demonstrate compelling decomposition results, enabling applications such as free-viewpoint rendering and relighting.

† Note the name itself is a "revolutionary" palindrome.
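Rotational symmetry is what makes single-view shape recovery tractable here: a revolutionary artefact is fully described by a 1D profile (radius as a function of height) swept around a vertical axis. The sketch below illustrates that idea only; it is not the paper's shape module. It assumes a y-up axis and a profile sampled bottom to top, and the function name `revolve_profile` and its parameters are hypothetical. It turns such a profile into 3D points and unit surface normals, the geometric inputs a renderer needs for shading and relighting.

```python
import math


def revolve_profile(radii, heights, n_theta=32):
    """Sweep a 2D profile around the vertical (y) axis (illustrative sketch).

    radii[i] is the distance from the axis at height heights[i]; heights are
    assumed to increase along the profile (bottom to top).
    Returns (points, normals), each a list of rings: points[i][j] is the 3D
    point for profile sample i at angle theta_j = 2*pi*j / n_theta.
    """
    if len(radii) != len(heights) or len(radii) < 2:
        raise ValueError("need matching radii/heights with at least 2 samples")
    n = len(radii)
    points, normals = [], []
    for i in range(n):
        # Finite-difference tangent (dr, dy) of the profile curve:
        # central differences inside, one-sided at the two ends.
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        dr = radii[hi] - radii[lo]
        dy = heights[hi] - heights[lo]
        length = math.hypot(dr, dy)
        if length == 0.0:
            raise ValueError("neighbouring profile samples must differ")
        # Rotating the tangent by -90 degrees gives (dy, -dr), which points
        # away from the axis when heights increase along the profile.
        n_r, n_y = dy / length, -dr / length
        ring_pts, ring_nrm = [], []
        for j in range(n_theta):
            theta = 2.0 * math.pi * j / n_theta
            c, s = math.cos(theta), math.sin(theta)
            ring_pts.append((radii[i] * c, heights[i], radii[i] * s))
            # Rotating the unit 2D normal about y keeps it unit length.
            ring_nrm.append((n_r * c, n_y, n_r * s))
        points.append(ring_pts)
        normals.append(ring_nrm)
    return points, normals


# Hypothetical vase-like profile: narrow base, wide belly, narrow neck.
vase_pts, vase_nrm = revolve_profile(
    [0.3, 0.6, 0.7, 0.4, 0.25], [0.0, 0.3, 0.6, 0.9, 1.0], n_theta=8
)
```

Because every point on a ring shares the same profile normal, rotated by theta, the whole 3D surface is determined by a handful of 1D parameters, which is the kind of constraint a rotational-symmetry prior can exploit.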

Results

De-rendering from a single image

Novel view rendering and relighting from a single image

Images from The Metropolitan Museum of Art Collection

Images from Open Images Dataset

Paper

De-rendering the World's Revolutionary Artefacts

Shangzhe Wu, Ameesh Makadia, Jiajun Wu, Noah Snavely, Richard Tucker, and Angjoo Kanazawa

In CVPR 2021

@InProceedings{wu2021derender,
  author = {Shangzhe Wu and Ameesh Makadia and Jiajun Wu and Noah Snavely and Richard Tucker and Angjoo Kanazawa},
  title = {De-rendering the World's Revolutionary Artefacts},
  booktitle = {CVPR},
  year = {2021}
}

Acknowledgements

We would like to thank Christian Rupprecht, Soumyadip Sengupta, Manmohan Chandraker and Andrea Vedaldi for insightful discussions. The webpage template was adapted from Richard Zhang's and Jason Zhang's templates.